One reason political discussions in the West rarely go very far is that the theory of “brainwashing” enjoys near-universal acceptance. The very notion of working out a disagreement through dialogue is seen as ridiculous: no matter what you believe, the other side is hopelessly “brainwashed” by immeasurably powerful media, so what’s the point? Faith in this theory defeats many Communists before they even begin trying.

Whenever I decisively oppose the propagation of this theory of “brainwashing,” [1] its fervent advocates bray back, “It’s backed by science!” But this isn’t true! As the peer-reviewed, open-access article reproduced below clearly demonstrates, the theory of “brainwashing” is not backed by science:

“There is no such thing as ‘brainwashing.’ Information is not passed from brain to brain like a virus is passed from body to body. […] The virus metaphor, all too popular during the COVID-19 pandemic — think of the ‘infodemic’ epithet — is misleading. It is reminiscent of outdated models of communication (e.g., ‘hypodermic needle model’) assuming that audiences were passive and easily swayed by pretty much everything they heard or read. […] These premises are at odds with what we know about human psychology and clash with decades of data from communication studies.”

I do understand that, precisely for the reasons laid out by these researchers, merely putting this knowledge out there won’t and can’t alter people’s attachment to the narrative, which has its roots elsewhere. That said, it’s brilliant work, and we hope it becomes far better known!

— R. D.

Contents

- Abstract
- 1. Introduction
- 2. Misconceptions About the Prevalence and Circulation of Misinformation
  - 2.1. Misinformation Is Just a Social Media Problem
  - 2.2. The Internet Is Rife With Misinformation
  - 2.3. Falsehoods Spread Faster Than the Truth
- 3. Misconceptions About the Impact and Reception of Misinformation
  - 3.1. People Believe Everything They See on the Internet
  - 3.2. A Large Number of People Are Misinformed
  - 3.3. Misinformation Has a Strong Influence on People’s Behavior
- 4. Conclusion
- References

Abstract

Alarmist narratives about online misinformation continue to gain traction despite evidence that its prevalence and impact are overstated. Drawing on research examining the use of big data in social science and reception studies, we identify six misconceptions about misinformation and highlight the conceptual and methodological challenges they raise. The first set of misconceptions concerns the prevalence and circulation of misinformation.

- First, scientists focus on social media because it is methodologically convenient, but misinformation is not just a social media problem.

- Second, the internet is not rife with misinformation or news, but with memes and entertaining content.

- Third, falsehoods do not spread faster than the truth; how we define (mis)information influences our results and their practical implications.

The second set of misconceptions concerns the impact and the reception of misinformation.

- Fourth, people do not believe everything they see on the internet: the sheer volume of engagement should not be conflated with belief.

- Fifth, people are more likely to be uninformed than misinformed; surveys overestimate misperceptions and say little about the causal influence of misinformation.

- Sixth, the influence of misinformation on people’s behavior is overblown as misinformation often “preaches to the choir.”

To appropriately understand and fight misinformation, future research needs to address these challenges.

1. Introduction

Concerns about misinformation are rising the world over. Today, Americans are more concerned about misinformation than sexism, racism, terrorism, or climate change. [2] Most of them (60%) think that “made-up news” had a major impact on the 2020 election. [3] Internet users are more afraid of fake news than online fraud and online bullying. [4] Numerous scientific and journalistic articles claim that online misinformation is the source of many contemporary sociopolitical issues while neglecting deeper factors such as the decline of trust in institutions [5] or in the media. [6]

Yet, the scientific literature is clear. Unreliable news, including false, deceptive, low-quality, or hyperpartisan news, represents a minute portion of people’s information diet; [7] most people do not share unreliable news; [8] on average people deem fake news less plausible than true news; [9] social media are not the only culprit; [10] and the influence of fake news on large sociopolitical events is overblown. [11]

Because of this disconnect between public discourse and empirical findings, a growing body of research argues that alarmist narratives about misinformation should be understood as a “moral panic.” [12] In particular, these narratives can be characterized as one of the many “technopanics” [13] that have emerged with the rise of digital media. These panics repeat themselves cyclically [14] and are fueled by a wide range of actors such as the mass media, policymakers, and experts. [15] A well-known example is the 1938 broadcast of Orson Welles’ radio drama The War of the Worlds, which was quickly followed by alarmist headlines from the newspaper industry claiming that Americans suffered from mass hysteria. Two years later, the psychologist Hadley Cantril gave academic credence to this idea by estimating that a million Americans truly believed in the Martian invasion. [16] Yet, his claim turned out to be empirically unfounded. As Brad Schwartz explains, “With the crude statistical tool of the day, there was simply no way of accurately judging how many people heard War of the Worlds, much less how many of them were frightened by it. But all the evidence — the size of the Mercury’s audience, the relatively small number of protest letters, and the lack of reported damage caused by the panic — suggest that few people listened and even fewer believed. Anything else is just guesswork.” [17]

The “Myth of the War of the Worlds Panic” illustrates how academic research can fuel, and legitimize, misconceptions about misinformation. Much research has documented the role played by media and political discourse in building an alarmist narrative on misinformation. [18] Here, we offer a critical review of academic discourse on misinformation, which exploded after the 2016 US presidential election with the publication of more than 2,000 scientific articles. [19] We explain why overgeneralizations of scientific results and insufficient attention to methodological limitations can lead to misconceptions about misinformation in the public sphere. Drawing on research questioning the use of big data in social sciences [20] and reception studies, [21] we identify six common misconceptions about misinformation (i.e., statements often found in press articles and scientific studies but not supported by empirical findings). These misconceptions fall into two groups: one concerning the prevalence and circulation of misinformation, and one concerning its impact and reception. Table 1 offers an overview of the misconceptions tackled in this article. Given that definitions of misinformation vary from one study to another, we use the term in its broadest sense, that is, as an umbrella term encompassing all forms of false or misleading information regardless of the intent behind it.

Table 1. Frequently Encountered Misconceptions About Misinformation in Column Two, and Misinterpretation of Research Results Associated With These Misconceptions in Column Three.

| | Misconceptions about misinformation | Misinterpretations of research results |
|---|---|---|
| Prevalence and circulation | 1. Misinformation is just a social media problem | Scientists focus on social media because it is methodologically convenient. Yet, misinformation in legacy media and offline networks is understudied. Social media make visible and quantifiable social phenomena that were previously difficult to observe. |
| | 2. The internet is rife with misinformation | Big numbers are the rule on the internet. The misinformation problem should be evaluated at the scale of the information ecosystem, e.g., by including news consumption and news avoidance in the equation. |
| | 3. Falsehoods spread faster than the truth | How (mis)information is defined influences the perceived scale of the problem and the solutions to fight it. Misinformation should not be framed only in terms of accuracy (true vs. false); it could also be framed in terms of harmfulness or ideological slant. |
| Impact and reception | 4. People believe everything they see on the internet | Prevalence should not be conflated with impact or acceptance. Sharing or liking is not believing. Digital traces do not always mean what we expect them to, and often, to fully understand them, fine-grained analyses are needed. |
| | 5. A large number of people are misinformed | Surveys overestimate people’s misperceptions or beliefs in conspiracy theories, and poorly measure rare behaviors. This is due to poor survey practices, but also to the instrumental use that some participants make of these surveys (e.g., expressive responding). |
| | 6. Misinformation has a strong influence on people’s behavior | People mostly consume misinformation they are predisposed to accept. Acceptance should not be conflated with attitude or behavioral change. People do not change their minds easily, let alone their behavior. |

2. Misconceptions About the Prevalence and Circulation of Misinformation

2.1. Misinformation Is Just a Social Media Problem

The internet drastically reduces the cost of accessing, producing, and sharing information. Social media reduces friction even more: the cost of connecting with physically distant minds is lower than ever. The media landscape is no longer controlled by traditional gatekeepers, and misinformation, just like any other content, is easier to publish. Today’s wide access to rich digital traces makes contemporary large-scale issues like misinformation easier to study, which can give the impression that misinformation was less prevalent (relative to reliable information) in the past. Alarmist headlines about the effect of social media and new technologies are widespread, such as “How technology disrupted the truth” [22] or “You thought fake news was bad? Deep fakes are where truth goes to die.” [23] Yet, we need to be careful when comparing the big data sets that we have today with the much poorer and smaller data sets of the past. We should also resist the temptation of idealizing the past. There was no golden age in which people only believed and communicated true information. [24] Conspiracy theories spread long before social media. [25] Misinformation, such as false rumors, is a universal feature of human societies, not a modern exception. [26] In the 1970s, well before the advent of the internet, rumors about “sex thieves” arose from interactions between strangers in markets and spread throughout Africa. [27] In 1969, rumors about women being abducted and sold as sex slaves proliferated in the French city of Orleans. [28] One cannot assume that misinformation is more common today simply because it is more visible and more easily measured.

Social scientists focus on social media, Twitter in particular, because it is methodologically convenient. [29] But active social media users are not representative of the general population, [30] and most U.S. social media users (70%) say they rarely or never post about political or social issues (only 9% say they often do so). [31] Traditional media may play an important role in spreading misinformation, but because of the current focus on social media, little is known about misinformation in widely used sources of information such as television or radio. According to Google Scholar, in May 2022, only seven papers had both “fake news” and “television” in their title, compared to 578 with “fake news” and “social media” (the results are similar for “misinformation”: 6 vs. 389). Television is a gateway to misinformation from elites, most notably from politicians. [32] For instance, Trump’s tweets in his final year of office were shown on cable news channels for a total of 32 hours. [33] More broadly, the detrimental consequences of mainstream partisan media, where politicians routinely spread misinformation, are well documented. [34] Political elites and partisan media did not wait for social media to spread misinformation, but they can now leverage social media features (e.g., hashtags) to frame topics of discussion in online public debate and to impose their partisan agenda, sometimes based on outright lies.

These limitations invite more nuanced views on the role of social media in the misinformation problem and suggest that more work is needed on misinformation in legacy media and offline networks.

2.2. The Internet Is Rife With Misinformation

During the 2016 US presidential campaign, the top 20 fake news stories on Facebook accumulated nearly nine million shares, reactions, and comments between August 1 and November 8. [35] But what do nine million interactions mean on Facebook? If the 1.5 billion Facebook users in 2016 had commented, reacted, or shared just one piece of content per week, the nine million engagements with the top fake news stories would represent only 0.042% of all their actions during the period studied. [36]
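The 0.042% figure can be reconstructed with a back-of-the-envelope calculation. This is a sketch under the same hypothetical as the text (each of the 1.5 billion users makes exactly one engagement per week), plus our own assumption that the period from August 1 to November 8, 2016, spans about 99 days, or roughly 14.1 weeks; the cited source may have counted the period slightly differently.

$$
\frac{9 \times 10^{6}\ \text{engagements}}{\left(1.5 \times 10^{9}\ \text{users}\right) \times \left(1\ \tfrac{\text{engagement}}{\text{user} \cdot \text{week}}\right) \times \tfrac{99}{7}\ \text{weeks}} \approx \frac{9 \times 10^{6}}{2.12 \times 10^{10}} \approx 4.2 \times 10^{-4} \approx 0.042\%
$$

Counting the period as a full 100 days barely changes the result, which still rounds to 0.042%.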

According to one estimate, in the US, between 2019 and 2020, traffic to untrustworthy websites increased by 70%, whereas traffic to trustworthy websites increased by 47%, [37] which led to the following headline: “Covid-19 and the rise of misinformation and misunderstanding.” [38] Yet, traffic to trustworthy websites is one order of magnitude higher than the traffic to untrustworthy websites. In March 2020, untrustworthy websites received 30 million additional views compared to March 2019, whereas traffic to trustworthy websites increased by two billion. [39]
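Comparing the two absolute increases quoted above shows why relative growth rates can mislead. Using only the March 2019 to March 2020 figures given in the text (and no assumption about baseline traffic), the additional views going to trustworthy websites were roughly 67 times the additional views going to untrustworthy ones:

$$
\frac{\Delta_{\text{trustworthy}}}{\Delta_{\text{untrustworthy}}} = \frac{2 \times 10^{9}\ \text{views}}{3 \times 10^{7}\ \text{views}} \approx 67
$$

A faster percentage increase on a much smaller base can thus coexist with a far smaller absolute gain.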

These two examples highlight the need to zoom out from the big numbers surrounding misinformation and put them in perspective with people’s information practices. The average US internet user spends less than 10 min a day consuming news online [40] and the average French internet user spends less than 5 min. [41] News consumption represents only 14% of Americans’ media diet and 3% of the time French people spend on the internet. [42] Where is misinformation in all that? It represents 0.15% of the American media diet and 0.16% of the French. [43] In addition, misinformation consumption is heavily skewed: in one study, 61% of French participants did not consult any unreliable sources during the 30-day observation period, [44] and a small minority of people account for most of the misinformation consumed and shared online. [45]

Just as the internet is not rife with news, the internet is not rife with misinformation. When people want to inform themselves, they primarily access established news websites, [46] or, more commonly, turn on the TV. [47] Misinformation receives little online attention compared to reliable news, and, in turn, reliable news receives little online attention compared to everything else that people do. [48] People do not use the internet primarily to be informed, but rather to connect with friends, shop, work, and more generally for entertainment. [49] Documenting the large volume of interactions generated by misinformation is important, but it should not be taken as proof of its predominance. Big numbers are the rule on the internet, and to be properly understood, they must be contextualized within the entire information ecosystem.

That being said, it is not always possible for researchers to consider the big picture, since access to social media data is restricted and often partial. As a result, many research questions remain unexplored and conclusions are sometimes uncertain, even when private companies provide access to huge amounts of data. For instance, despite its unprecedented size, the URLs dataset released by Facebook via Social Science One’s program only includes URLs that have been publicly shared at least 100 times. Because of this threshold of 100 public shares, the dataset overestimates the prevalence of misinformation on Facebook by a factor of four. [50] Despite our limited access to social media data, methodological improvements are possible. For example, even with partial data access, it is possible to conduct cross-platform studies, which are needed to obtain accurate estimates of misinformation prevalence and to quantify the diversity of people’s news diets. [51]

2.3. Falsehoods Spread Faster Than the Truth

The idea that falsehoods spread faster than the truth was amplified and legitimized by an influential article published in Science which found, among other things, that “it took the truth about six times as long as falsehood to reach 1,500 people.” [52] The study was quickly covered in prominent news outlets and led to alarmist headlines such as “Want something to go viral? Make it fake news.” [53] Yet, Vosoughi and his colleagues did not examine the spread of true and false news online in general, but of “contested news” that fact-checkers classified as either true or false, leaving aside a large volume of uncontested news, some of which is extremely viral. For instance, the royal wedding between Prince Harry and Meghan Markle attracted 29 million viewers in the United States and generated at least 6.9 million interactions on Facebook. [54] Similarly, the picture of Lionel Messi holding the 2022 World Cup trophy generated 75 million likes on Instagram (and more than 2 million comments), beating the Instagram record previously held by a photo of an egg with 59 million likes. The authors themselves publicly explained this sampling bias. [55] Yet, journalists and scientists quickly overgeneralized, and nuances were lost. Subsequent studies found conflicting results, with science-based evidence and fact-checking being retweeted more than false information during the COVID-19 pandemic [56] and news from hyperpartisan sites not disseminating more quickly than mainstream news on Australian Twitter. [57] Moreover, a cross-platform study found no difference in the spreading pattern of news from reliable and questionable sources. [58] This is not to say that the findings of Vosoughi et al. on fact-checked news do not hold, but rather that how information and misinformation are defined greatly influences the conclusions we draw.

Misinterpretations of that article raise the following questions: What categories should we use? Do dichotomous measures of veracity make sense? How should we define misinformation? In recent years, important efforts have been made to refine the blurred concept of “fake news.” [59] In practice, though, researchers use the ready-made categories constructed by fact-checkers, putting under the same heading content that differs in terms of harmfulness or facticity.

How (mis)information is defined influences the perceived scale of the problem and the solutions to fight it. [60] For instance, in 2016, a widely circulated BuzzFeed article showed that fake news outperformed true news on Facebook in terms of engagement before the U.S. presidential election. [61] However, when hyperpartisan news (such as Fox News) was excluded from the fake news category, reliable news largely outperformed fake news. [62] Politically biased information that is not false could have harmful effects, [63] but does it belong in the misinformation category? As Rogers explains, “stricter definitions of misinformation (imposter sites, pseudo-science, conspiracy, extremism only) lessen the scale of the problem, while roomier ones (adding “hyperpartisan” and “junk” sites serving clickbait) increase it, albeit rarely to the point where it outperforms non-problematic (or more colloquially, mainstream) media.” [64] Of course, no typology could put every piece of news in the “right” category to everyone’s satisfaction, but it is important to be mindful of the limitations of the categories we use.

In the first part of this article, we have highlighted three misconceptions about the prevalence and circulation of misinformation. We encourage future research to broaden the scope of misinformation studies by (1) putting misinformation consumption into perspective with people’s broader information practices and (2) with all their online activities, and by (3) moving away from blatantly false information to study subtler forms of information disorder.

Figure 1. Contextualizing misinformation prevalence and circulation. From the innermost circle outward: fact-checked false news; broader forms of misinformation, including, e.g., partisan interpretations; all online information; all information, including offline.

3. Misconceptions About the Impact and Reception of Misinformation

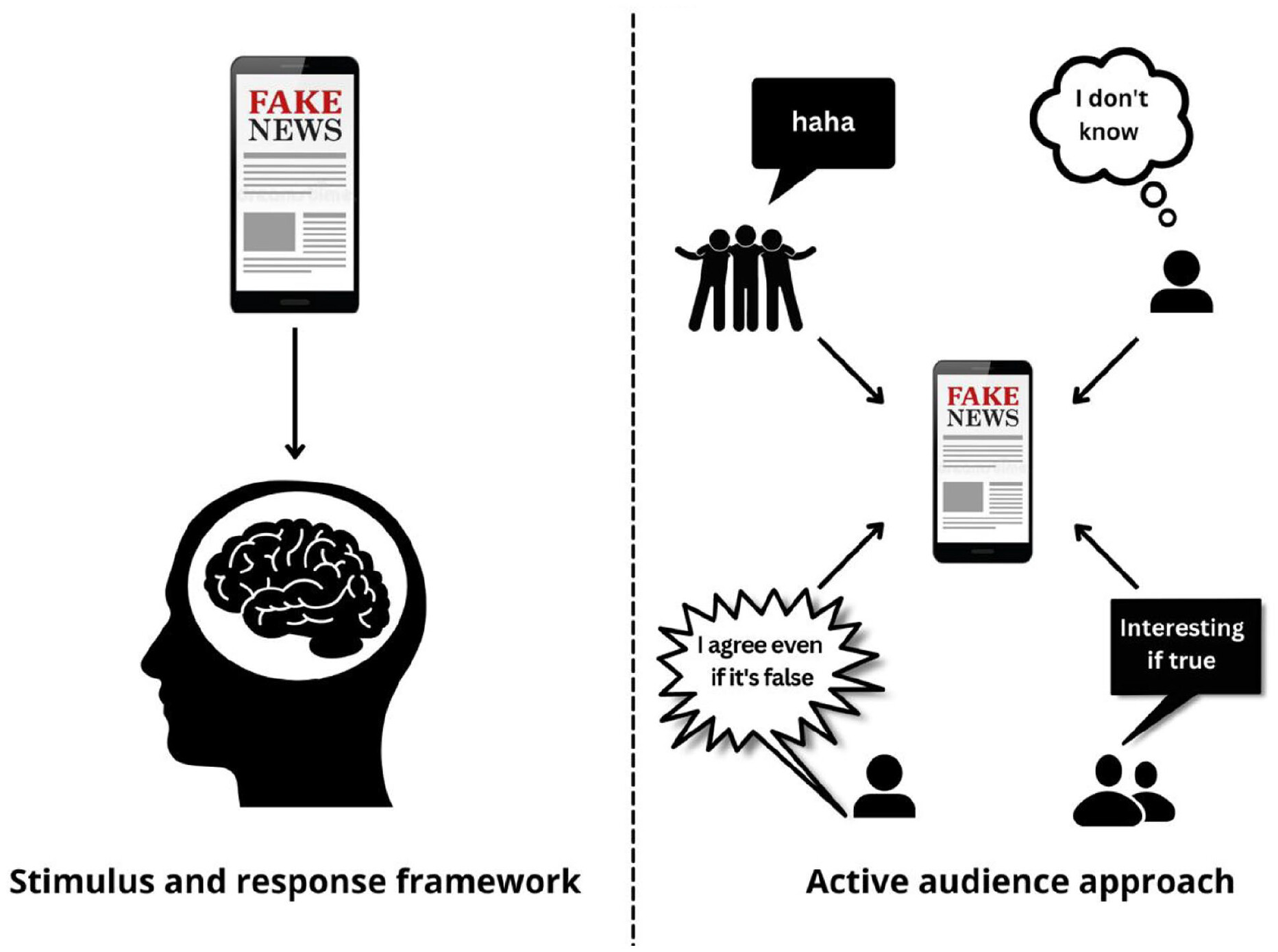

In the second part of this article, we examine misconceptions about the impact and reception of misinformation. The fact that the numbers generated by misinformation are small compared to those of reliable information or non-news content does not mean we can dismiss them. Small numbers can make a difference at a societal level, but to accurately assess their impact and how they translate into attitudes and behaviors, we need to understand what they mean. Until now, studies on misinformation have been dominated by experimental approaches, self-report surveys, and big data methods that reduce the reception of misinformation to mere stimulus-and-response mechanisms and fail to capture audience agency and contexts. Drawing on an active audience approach, the following sections explain why certain findings from quantitative studies can lead to misconceptions about the impact and reception of misinformation (see Figure 2).

Figure 2. Contextualizing misinformation effect and reception.

3.1. People Believe Everything They See on the Internet

Media effects are increasingly measured via big data methods [65] neglecting the agency of people and paying little attention to communicative context. [66] As Danah Boyd and Kate Crawford put it, “why people do things, write things, or make things can be lost in the sheer volume of numbers.” [67] For instance, it is tempting to conflate prevalence with impact, but the diffusion of inaccurate information should be distinguished from its reception. People are more likely to share true and false news they perceive as more accurate, [68] but there is also a well-documented disconnect between sharing intentions and accuracy judgments. [69] Sharing and liking are not believing. People interact with misinformation for a variety of reasons: to socialize, to express skepticism, outrage or anger, to signal group membership, or simply to have a good laugh. [70] Engagement metrics are an imperfect proxy for information reception and are not sufficient to estimate the impact of misinformation. As Wagner and Boczkowski [71] note, “studies that measure exposure to fake news seem sometimes to easily infer acceptance of content due to mere consumption of it, therefore perhaps exaggerating potential negative effects […] future studies should methodologically account for critical or ironic news sharing, as well as other forms of negotiation and resignification of false content.”

With the datafication of society, scientists’ attention shifted from empirical audiences to the digital traces that they leave. However, we should not forget the people who left these traces in the digital record, nor neglect the rich theoretical framework produced by decades of reception studies based on in situ observations of people consuming media content. [72] People are not passive receptacles of information. They are active and interpretative, and they domesticate technologies in complex and unexpected ways. [73] People are more skeptical than gullible when browsing online. [74] Trust in the media is low, but trust in information encountered on social media is even lower. And people deploy a variety of strategies to detect and counter misinformation, such as checking different sources or turning to fact-checks. [75] People often dismissed as “irrational” or “gullible” in public discourse, such as “conspiracy theorists” or “anti-vaxxers,” deploy sophisticated verification strategies to “fact-check” the news in their own way [76] and produce “objectivist counter-expertise” [77] by doing their “own research.” [78] For instance, a recent in-depth, mixed-methods analysis of around 15,000 comments showed how “anti-vaxxers” cite scientific studies on Facebook groups to support their positions and challenge the objectivity of mainstream media. [79]

Given people’s skepticism toward information encountered online and the low prevalence of misinformation in their media diet, interventions aimed at reducing the acceptance of misinformation are bound to have smaller effects than interventions increasing trust in reliable sources of information. [80] More broadly, enhancing trust in reliable sources should be a priority over fostering distrust in unreliable sources. [81]

3.2. A Large Number of People Are Misinformed

Headlines about the ubiquity of misbeliefs are rampant in the media and are most often based on surveys. But how well do surveys measure misbeliefs? Luskin and colleagues [82] analyzed the design of 180 media surveys with closed-ended questions measuring belief in misinformation. They found that more than 90% of these surveys lacked an explicit “Don’t know” or “Not sure” option and used formulations encouraging guessing such as “As far as you know …” or “Would you say that …” Participants often answer such questions by guessing and end up reporting beliefs that they did not hold before the survey. [83] Not providing, or not encouraging, “Don’t know” answers is known to increase guessing even more. [84] Guessing would not be a major issue if it only added noise to the data. To find out whether it does more than that, Luskin and colleagues [85] tested the impact of omitting “Don’t know” options and encouraging guessing on the prevalence of misbeliefs. They found that this format overestimates the proportion of incorrect answers by nine percentage points (25% vs. 16%) and, when considering only people who report being confident in holding a misperception, by 20 percentage points (25% vs. 5%). In short, survey items measuring misinformation overestimate the extent to which people are misinformed, eclipsing the share of those who are simply uninformed.
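Expressed as ratios rather than percentage-point gaps, and treating the lower figure in each pair as the better estimate (as the text does), the Luskin and colleagues results imply that the guessing-friendly format inflates the apparent share of misinformed respondents by a factor of about 1.6 overall, and by a factor of 5 among respondents who report being confident:

$$
\frac{25\%}{16\%} \approx 1.6, \qquad \frac{25\%}{5\%} = 5
$$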

In the same vein, conspiratorial beliefs are notoriously difficult to measure, and surveys tend to exaggerate their prevalence. [86] For instance, participants in survey experiments display a preference for positive response options (yes vs. no, or agree vs. disagree), which inflates agreement with statements, including conspiracy theories, by up to 50%. [87] Moreover, the absence of “Don’t know” options, together with the inability to express a preference for conventional over conspiratorial explanations, leads surveys to greatly overestimate the prevalence of conspiratorial beliefs. [88] These methodological problems contributed to unsupported alarmist narratives about the prevalence of conspiracy theories, such as QAnon going mainstream. [89]

Moreover, the misperceptions that surveys measure are skewed toward politically controversial and polarizing misperceptions, which are not representative of the misperceptions that people actually hold. [90] This skew could fuel affective polarization, by emphasizing differences between groups instead of similarities, and inflate the apparent prevalence of misbeliefs. When misperceptions become group markers, participants use them to signal group membership, whether they truly believe the misperceptions or not. [91] Responses to factual questions in survey experiments are known to be vulnerable to “partisan cheerleading,” [92] in which participants give politically congenial responses instead of stating their true beliefs. Quite famously, a large share of Americans said that Donald Trump’s inauguration in 2017 was more crowded than Barack Obama’s in 2009, despite being presented with visual evidence to the contrary. Partisanship does not directly influence people’s perceptions: misperceptions about the size of the crowds were largely driven by expressive responding and guessing. Respondents who supported President Trump “intentionally provide misinformation” to reaffirm their partisan identity. [93] The extent to which expressive responding contributes to the overestimation of other political misbeliefs is debated, [94] but it is probably significant.

Solutions have been proposed to overcome these flaws and measure misbeliefs more accurately, such as including confidence-in-knowledge measures [95] and classifying as misinformed only those participants who firmly and confidently say they believe misinformation items. [96] Yet, even when people report confidently holding misbeliefs, these misbeliefs are highly unstable across time, much more so than accurate beliefs. [97] For instance, the responses of people saying they are 100% certain that climate change is not occurring have the same measurement properties as responses of people saying they are 100% certain the continents are not moving or that the sun goes around the Earth. [98] A participant’s response at time T does not predict their answer at time T + 1. In other words, flipping a coin would give a similar response pattern.

So far, we have seen that even well-designed surveys overestimate the prevalence of misbeliefs. A further issue is that surveys are unreliable at measuring rare events such as exposure to fake news. People report being exposed to a substantial amount of misinformation and recall having been exposed to particular fake headlines. [99] To estimate the reliability of these measures, Allcott and Gentzkow showed participants the 14 most popular fake news stories of the 2016 US election campaign, together with 14 made-up “placebo” fake news stories. Fifteen percent of participants reported having been exposed to one of the 14 real fake news stories, but 14% also reported having been exposed to one of the 14 placebos.
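One rough way to read these figures, assuming that placebo recall approximates the rate at which respondents falsely remember headlines they never saw, is to subtract the placebo rate from the recall rate for real fake news:

$$
\underbrace{15\%}_{\text{recall of real fake news}} - \underbrace{14\%}_{\text{recall of placebos}} \approx 1\ \text{percentage point}
$$

On that simplifying assumption, only about one percentage point of the reported 15% would reflect genuine recall, which is why self-reports of rare events like this should be treated with caution.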

During the pandemic, many people supposedly engaged in extremely dangerous hygiene practices to fight COVID-19 because of misinformation encountered on social media, such as drinking diluted bleach. [100] This led to headlines such as “COVID-19 disinformation killed thousands of people, according to a study.” [101] Yet, the study is silent regarding causality and cannot be taken as evidence that misinformation had a causal impact on people’s behavior. [102] Self-reported figures on such behaviors are also questionable. In one survey, 39% of Americans reported having engaged in at least one cleaning practice not recommended by the CDC, 4% reported drinking or gargling a household disinfectant, and another 4% reported drinking or gargling diluted bleach. [103] These percentages should not be taken at face value. A replication of the survey found that these worrying responses are entirely attributable to problematic respondents who also reported “recently having had a fatal heart attack” or “eating concrete for its iron content” at a rate similar to that of ingesting household cleaners. [104] The authors conclude that “Once inattentive, mischievous, and careless respondents are taken out of the analytic sample we find no evidence that people ingest cleansers to prevent Covid-19 infection.” [105] This is not to say that COVID-19 misinformation had no harmful effects (such as creating confusion or eroding trust in reliable information), but rather that surveys using self-reported measures of rare and dangerous behaviors should be interpreted with caution.

3.3. Misinformation Has a Strong Influence on People’s Behavior

Sometimes, people do believe what they see on the internet, and engagement metrics do translate into belief. Yet even when misinformation is believed, it has not necessarily changed anyone’s mind or behavior. First, people largely consume politically congenial misinformation, [106] that is, misinformation they already agree with or are predisposed to accept. Congenial misinformation “preaches to the choir” and is unlikely to have drastic effects beyond reinforcing previously held beliefs. Second, even when misinformation changes people’s minds and leads to the formation of new (mis)beliefs, it is not clear whether these (mis)beliefs ever translate into behavior. Attitudes are only weak predictors of behavior, a problem well known in public policy as the value-action gap. [107] Most notoriously, people report being increasingly concerned about the environment without adjusting their behavior accordingly. [108]

Common misbeliefs, such as conspiracy theories, are likely held in ways that limit their influence on behavior. [109] For instance, the behavior of people who hold common misbeliefs is often at odds with what we would expect if they actually believed them. As Jonathan Kay [110] noted, “one of the great ironies of the Truth movement is that its activists typically hold their meetings in large, unsecured locations such as college auditoriums — even as they insist that government agents will stop at nothing to protect their conspiracy for world domination from discovery.” Many of these misbeliefs are likely post hoc rationalizations of pre-existing attitudes, such as distrust of institutions.

In the real world, it is difficult to measure how much attitude change misinformation causes, and it is a daunting task to assess its impact on people’s behavior. Surveys relying on correlational data tell us little about causation. For example, belief in conspiracy theories is associated with many costly behaviors, such as COVID-19 vaccine refusal. [111] Does this mean that vaccine hesitancy is caused by conspiracy theories? Not necessarily: both vaccine hesitancy and belief in conspiracy theories could be caused by other factors, such as low trust in institutions. [112] A few ingenious studies have allowed some causal inferences to be drawn. For instance, Kim and Kim used a longitudinal survey to capture people’s beliefs and behaviors both before and after the diffusion of the “Obama is a Muslim” rumor. They found that after the rumor spread, more people believed that Obama was a Muslim. Yet this effect was “driven almost entirely by those predisposed to dislike Obama,” [113] and the diffusion of the rumor had no measurable effect on people’s intention to vote for Obama. This should not come as a surprise, considering that even political campaigns and political advertising have only weak and indirect effects on voters. [114] As David Karpf writes, “Generating social media interactions is easy; mobilizing activists and persuading voters is hard.” [115]
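The confounding argument can be illustrated with a toy simulation. In this hypothetical model (our assumption, not an estimate from any cited study), low trust in institutions raises the probability of both conspiracy belief and vaccine hesitancy, while neither has any direct effect on the other. The probabilities 0.6 and 0.1, the sample size, and the seed are arbitrary illustrative choices.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


def simulate(n=20_000, seed=1):
    """Hypothetical model: low trust drives both outcomes; no direct link between them."""
    rng = random.Random(seed)
    trust, belief, hesitancy = [], [], []
    for _ in range(n):
        low_trust = rng.random() < 0.5
        p = 0.6 if low_trust else 0.1  # assumed probabilities, not empirical estimates
        trust.append(low_trust)
        # Belief and hesitancy are drawn independently once trust is fixed.
        belief.append(1 if rng.random() < p else 0)
        hesitancy.append(1 if rng.random() < p else 0)
    return trust, belief, hesitancy


trust, belief, hesitancy = simulate()
overall = pearson(belief, hesitancy)

# Correlation within each trust group, where the common cause is held constant.
within = {}
for group in (True, False):
    idx = [i for i, t in enumerate(trust) if t == group]
    within[group] = pearson([belief[i] for i in idx], [hesitancy[i] for i in idx])

print(f"overall r = {overall:.2f}")
print(f"within low-trust r = {within[True]:.2f}, within high-trust r = {within[False]:.2f}")
```

Under these assumptions the overall correlation should come out near its theoretical value of about 0.27, while the within-group correlations hover around zero: a sizable association between belief and hesitancy appears even though, by construction, one does not cause the other.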

The idea that exposure to misinformation (or information) has a strong and direct influence on people’s attitudes and behaviors comes from a misleading analogy of social influence according to which ideas infect human minds like viruses infect human bodies. Americans did not vote for Trump in 2016 because they were brainwashed. There is no such thing as “brainwashing.” [116] Information is not passed from brain to brain like a virus is passed from body to body. When humans communicate, they constantly reinterpret the messages they receive, and modify the ones they send. [117] The same tweet will create very different mental representations in each brain that reads it, and the public representations people leave behind them, in the form of digital traces, are only an imperfect proxy of their private mental representations. The virus metaphor, all too popular during the COVID-19 pandemic — think of the “infodemic” epithet — is misleading. [118] It is reminiscent of outdated models of communication (e.g., “hypodermic needle model”) assuming that audiences were passive and easily swayed by pretty much everything they heard or read. [119] As Anderson notes, “we might see the role of Facebook and other social media platforms as returning us to a pre-Katz and Lazarsfeld era, with fears that Facebook is ‘radicalizing the world’ and that Russian bots are injecting disinformation directly in the bloodstream of the polity.” [120] These premises are at odds with what we know about human psychology and clash with decades of data from communication studies.

4. Conclusion

We identified six misconceptions about the prevalence and impact of misinformation and examined the conceptual and methodological challenges they raise. First, social media makes the perfect villain, but before blaming it for the misinformation problem, more work needs to be done on legacy media and offline networks. Second, the misinformation problem should be evaluated at the scale of the information ecosystem, for example, by including news consumption and news avoidance in the equation. Third, we should be mindful of the categories we use, such as “fake” versus “true” news, since they influence our results and their practical implications. Fourth, more qualitative and quantitative reception studies are needed to understand people’s informational practices. Digital traces do not always mean what we expect them to, and fully understanding them often requires fine-grained analyses. Fifth, the number of misinformed people is likely to be overestimated. Surveys measuring misbeliefs should include “Don’t know” or “Not sure” options and avoid wording that encourages guessing. Sixth, we should resist monocausal explanations and avoid blaming misinformation for complex socioeconomic problems. People mostly consume information they are predisposed to accept; this acceptance should not be conflated with attitude or behavioral change. More broadly, conclusions drawn from engagement metrics, online experiments, or surveys should be taken with a grain of salt, as they tend to overestimate the prevalence of misbeliefs and tell us little about the causal influence of misinformation and its reception. Below, we detail practical avenues for future research to overcome some of these challenges.

We need to move away from a focus on fake news and blatantly false information, as their prevalence and effects are likely minimal. Instead, we should investigate subtler forms of misinformation that produce biased perceptions of reality. For instance, partisan media use true information in misleading ways, selectively reporting on some issues but not others and framing them in a politically congruent manner. [121]

We need to better interpret data collected via computational methods or self-report surveys. So far, misinformation studies have been dominated by big data methods and experimental approaches. This research would benefit from being complemented by active audience research. [122] For instance, instead of focusing on media effects, where participants are often implicitly depicted as passive, it would be more fruitful to study the different ways people use the information they consume online. Similarly, digital ethnographies are needed to understand the reception of misinformation in our complex digital ecosystem.

We need to rely on more complex models of influence. Metaphors like “contagion” and “infodemic” provide an overly simplified — and incorrect — view of how influence works. [123] People do not change their minds when exposed to “fake news” on social media. Social media does not turn people into conspiracy theorists. Individual predispositions, such as conspiracy mentality, mediate the relationship between social media use and belief in conspiracy theories. [124] These mediating variables, which explain for instance why some people but not others visit untrustworthy websites, should be investigated further.

We need to ask better research questions. The misconceptions outlined above have (mis)guided some research agendas toward an excessive focus on why people “fall for fake news” or on how to make people more skeptical. Yet most people do not fall for fake news and are (overly) skeptical of news on social media. This excessive focus on biases, common in psychology, has left some pressing questions unanswered. For example, we know very little about the cognitive and social tools that people use to verify information online. Future studies should move from a perspective centered on identifying isolated failures of individual cognition to an approach attentive to audiences’ critical skills. Our digital environment has equipped us with resources such as fact-checking websites, search engines, and Wikipedia, making it easier than ever to verify content encountered online and offline. Yet research on the uses (and misuses) of these new information search and verification tools is drastically lacking.

The study of misconceptions does not entail a focus on misinformation. People commonly hold false beliefs and engage in detrimental behaviors because they do not trust institutions and choose not to inform themselves. Instead of focusing on the small number of people who consume news from unreliable sources, it would be more fruitful to focus on the large share of people who are overly skeptical of reliable sources and rarely consume any news. [125] Despite legitimate concerns about misinformation, people are more likely to be uninformed than misinformed, [126] even during the COVID-19 pandemic. [127]

To appropriately understand and fight misinformation, it is crucial to keep these conceptual and methodological blind spots in mind. Just like misinformation, misinformation on misinformation could have deleterious effects, [128] such as diverting society’s attention and resources from deeper socioeconomic issues or further fueling people’s mistrust of the media. [129] Misinformation is mostly a symptom of deeper sociopolitical problems rather than a cause of them. [130] Fighting the symptoms can help, but it should not divert us from the real causes, nor overshadow the need to fight for access to accurate, transparent, and quality information.

Acknowledgments: We would like to thank Dominique Cardon, Héloïse Théro, and Shaden Shabayek for proofreading our manuscript and for their valuable feedback and corrections.

References

- Acerbi A. (2020). Cultural evolution in the digital age. Oxford University Press.

- Acerbi A., Altay S., Mercier H. (2022). Research note: Fighting misinformation or fighting for information? Harvard Kennedy School Misinformation Review. [web]

- Alexander S. (2013). Lizardman’s constant is 4%. Slate Star Codex.

- Allcott H., Gentzkow M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211–236. [web]

- Allen J., Howland B., Mobius M., Rothschild D., Watts D. J. (2020). Evaluating the fake news problem at the scale of the information ecosystem. Science Advances, 6(14), eaay3539. [web]

- Allen J., Mobius M., Rothschild D. M., Watts D. J. (2021). Research note: Examining potential bias in large-scale censored data. Harvard Kennedy School Misinformation Review. [web]

- Altay S. (2022). How effective are interventions against misinformation? [web]

- Altay S., Acerbi A. (2022). Misinformation is a threat because (other) people are gullible. [web]

- Altay S., de Araujo E., Mercier H. (2021). “If this account is true, it is most enormously wonderful”: Interestingness-if-true and the sharing of true and false news. Digital Journalism, 10, 373–394. [web]

- Altay S., Hacquin A.-S., Mercier H. (2020). Why do so few people share fake news? It hurts their reputation. New Media & Society, 24, 1303–1324. [web]

- Altay S., Nielsen R. K., Fletcher R. (2022). Quantifying the “infodemic”: People turned to trustworthy news outlets during the 2020 coronavirus pandemic. Journal of Quantitative Description: Digital Media, 2. [web]

- Anderson C. W. (2021). Fake news is not a virus: On platforms and their effects. Communication Theory, 31(1), 42–61. [web]

- Athique A. (2018). The dynamics and potentials of big data for audience research. Media, Culture & Society, 40(1), 59–74. [web]

- Auxier B. (2020). 64% of Americans say social media have a mostly negative effect on the way things are going in the U.S. today. Pew Research Center. [web]

- Benkler Y., Faris R., Roberts H. (2018). Network propaganda: Manipulation, disinformation, and radicalization in American politics. Oxford University Press.

- Bennett W. L., Livingston S. (Eds.). (2020). The disinformation age: Politics, technology, and disruptive communication in the United States. Cambridge University Press. [web]

- Berger S. (2018). Here’s how many Americans watched Prince Harry and Meghan Markle’s royal wedding. CNBC. [web]

- Berriche M. (2021). In search of sources. Politiques de Communication, 1, 115–154. [web]

- Berriche M., Altay S. (2020). Internet users engage more with phatic posts than with health misinformation on Facebook. Palgrave Communications, 6, 71. [web]

- Bonhomme J. (2016). The Sex Thieves. The Anthropology of a Rumor. Média Diffusion.

- Boyd D., Crawford K. (2012). Critical questions for big data: Provocations for a cultural, technological, and scholarly phenomenon. Information, Communication & Society, 15(5), 662–679.

- Broockman D., Kalla J. (2022). The manifold effects of partisan media on viewers’ beliefs and attitudes: A field experiment with Fox News viewers. OSF Preprints, 1. [web]

- Bruns A., Keller T. (2020). News diffusion on Twitter: Comparing the dissemination careers for mainstream and marginal news. Social Media + Society. [web]

- Bullock J. G., Gerber A. S., Hill S. J., Huber G. A. (2013). Partisan bias in factual beliefs about politics. National Bureau of Economic Research. [web]

- BuzzFeed. (2016). Here are 50 of the biggest fake news hits on Facebook from 2016. [web]

- Cantril H., Gaudet H., Herzog H. (1940). The invasion from Mars. Princeton University Press.

- Carlson M. (2020). Fake news as an informational moral panic: The symbolic deviancy of social media during the 2016 US presidential election. Information, Communication & Society, 23(3), 374–388.

- Cinelli M., Quattrociocchi W., Galeazzi A., Valensise C. M., Brugnoli E., Schmidt A. L., Zola P., Zollo F., Scala A. (2020). The COVID-19 social media infodemic. Scientific Reports, 10(1), 1–10.

- Claidière N., Scott-Phillips T. C., Sperber D. (2014). How Darwinian is cultural evolution? Philosophical Transactions of the Royal Society B: Biological Sciences, 369(1642), 20130368. [web]

- Clifford S., Kim Y., Sullivan B. W. (2019). An improved question format for measuring conspiracy beliefs. Public Opinion Quarterly, 83(4), 690–722.

- Cohen S. (1972). Folk devils and moral panics. Routledge. [web]

- Cointet J.-P., Cardon D., Mogoutov A., Ooghe-Tabanou B., Plique G., Morales P. (2021). Uncovering the structure of the French media ecosystem. [web]

- Cordonier L., Brest A. (2021). How do the French inform themselves on the Internet? Analysis of online information and disinformation behaviors. Fondation Descartes. [web]

- Cushion S., Soo N., Kyriakidou M., Morani M. (2020). Research suggests UK public can spot fake news about COVID-19, but don’t realise the UK’s death toll is far higher than in many other countries. LSE COVID-19 Blog. [web]

- Fletcher R., Cornia A., Graves L., Nielsen R. K. (2018). Measuring the reach of “fake news” and online disinformation in Europe. Reuters Institute Factsheet. [web]

- Fletcher R., Nielsen R. K. (2019). Generalised scepticism: How people navigate news on social media. Information, Communication & Society, 22(12), 1751–1769.

- Fox M. (2018). Fake News: Lies spread faster on social media than truth does. NBC News. [web]

- France info. (2020). Désintox. Non, la désinformation n’a pas fait 800 morts [No, disinformation did not kill 800 people]. France Info. [web]

- Gharpure R., Hunter C. M., Schnall A. H., Barrett C. E., Kirby A. E., Kunz J., Berling K., Mercante J. W., Murphy J. L., Garcia-Williams A. G. (2020). Knowledge and practices regarding safe household cleaning and disinfection for COVID-19 prevention—United States, May 2020. Morbidity and Mortality Weekly Report, 69(23), 705–709.

- Graham M. H. (2020). Self-awareness of political knowledge. Political Behavior, 42(1), 305–326.

- Graham M. H. (2021). Measuring misperceptions? American Political Science Review.

- Grinberg N., Joseph K., Friedland L., Swire-Thompson B., Lazer D. (2019). Fake news on Twitter during the 2016 US Presidential election. Science, 363(6425), 374–378. [web]

- Guess A., Aslett K., Tucker J., Bonneau R., Nagler J. (2021). Cracking open the news feed: Exploring what US Facebook users see and share with large-scale platform data. Journal of Quantitative Description: Digital Media, 1. [web]

- Guess A., Lockett D., Lyons B., Montgomery J. M., Nyhan B., Reifler J. (2020). “Fake news” may have limited effects beyond increasing beliefs in false claims. Harvard Kennedy School Misinformation Review, 1(1). [web]

- Guess A., Nagler J., Tucker J. (2019). Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Science Advances, 5(1), eaau4586. [web]

- Hill S. J., Roberts M. E. (2021). Acquiescence bias inflates estimates of conspiratorial beliefs and political misperceptions. [web]

- Islam M. S., Sarkar T., Khan S. H., Kamal A.-H. M., Hasan S. M., Kabir A., Yeasmin D., Islam M. A., Chowdhury K. I. A., Anwar K. S. (2020). COVID-19–related infodemic and its impact on public health: A global social media analysis. The American Journal of Tropical Medicine and Hygiene, 103(4), 1621.

- Jungherr A., Schroeder R. (2021). Disinformation and the structural transformations of the public arena: Addressing the actual challenges to democracy. Social Media + Society, 7(1), 2056305121988928.

- Kalla J. L., Broockman D. E. (2018). The minimal persuasive effects of campaign contact in general elections: Evidence from 49 field experiments. American Political Science Review, 112(1), 148–166. [web]

- Karpf D. (2019). On digital disinformation and democratic myths. Mediawell (Social Science Research Council).

- Kay J. (2011). Among the Truthers: A journey through America’s growing conspiracist underground. Harper Collins.

- Kim J. W., Kim E. (2019). Identifying the effect of political rumor diffusion using variations in survey timing. Quarterly Journal of Political Science, 14(3), 293–311. [web]

- Kitchin R. (2014). Big Data, new epistemologies and paradigm shifts. Big Data & Society, 1(1), 2053951714528481. [web]

- Kollmuss A., Agyeman J. (2002). Mind the gap: Why do people act environmentally and what are the barriers to pro-environmental behavior? Environmental Education Research, 8(3), 239–260.

- Krosnick J. A. (2018). Questionnaire design. In The Palgrave handbook of survey research (pp. 439–455). Palgrave Macmillan.

- Landry N., Gifford R., Milfont T. L., Weeks A., Arnocky S. (2018). Learned helplessness moderates the relationship between environmental concern and behavior. Journal of Environmental Psychology, 55, 18–22.

- Lasswell H. D. (1927). The theory of political propaganda. The American Political Science Review, 21(3), 627–631. [web]

- Li J., Wagner M. W. (2020). The value of not knowing: Partisan cue-taking and belief updating of the uninformed, the ambiguous, and the misinformed. Journal of Communication, 70(5), 646–669.

- Litman L., Rosen Z., Rosenzweig C., Weinberger S. L., Moss A. J., Robinson J. (2020). Did people really drink bleach to prevent COVID-19? A tale of problematic respondents and a guide for measuring rare events in survey data. medRxiv. [web]

- Livingstone S. (2019). Audiences in an age of datafication: Critical questions for media research. [web]

- Longhi J. (2021). Mapping information and identifying disinformation based on digital humanities methods: From accuracy to plasticity. Digital Scholarship in the Humanities, 36, 980–998. [web]

- Lull J. (1988). World families watch television. SAGE.

- Luskin R. C., Bullock J. G. (2011). “Don’t know” means “don’t know”: DK responses and the public’s level of political knowledge. The Journal of Politics, 73(2), 547–557.

- Luskin R. C., Sood G., Park Y. M., Blank J. (2018). Misinformation about misinformation? Of headlines and survey design. Unpublished Manuscript. [web]

- Majid A. (2021). Covid-19 and the rise of misinformation and misunderstanding. PressGazette. [web]

- Marwick A. E., Partin W. C. (2022). Constructing alternative facts: Populist expertise and the QAnon conspiracy. New Media & Society. Advance online publication. [web]

- Marwick A. E. (2008). To catch a predator? The MySpace moral panic. First Monday, 13(6). [web]

- McClain C. (2021). 70% of U.S. social media users never or rarely post or share about political, social issues. [web]

- Mellon J., Prosser C. (2017). Twitter and Facebook are not representative of the general population: Political attitudes and demographics of British social media users. Research & Politics, 4(3), 2053168017720008. [web]

- Mercier H. (2020). Not born yesterday: The science of who we trust and what we believe. Princeton University Press.

- Mercier H., Altay S. (2022). Do cultural misbeliefs cause costly behavior? In Musolino J., Hemmer P., Sommer J. (Eds.), The science of beliefs. [web]

- Metzger M. J., Flanagin A. J., Mena P., Jiang S., Wilson C. (2021). From dark to light: The many shades of sharing misinformation online. Media and Communication, 9, 3409.

- Miró-Llinares F., Aguerri J. C. (2021). Misinformation about fake news: A systematic critical review of empirical studies on the phenomenon and its status as a “threat.” European Journal of Criminology. Advance online publication. [web]

- Mitchell A., Gottfried J., Stocking G., Walker M., Fedeli S. (2019). Many Americans say made-up news is a critical problem that needs to be fixed. Pew Research Center’s Journalism Project. [web]

- Mitchelstein E., Matassi M., Boczkowski P. J. (2020). Minimal effects, maximum panic: Social media and democracy in Latin America. Social Media + Society, 6(4), 2056305120984452. [web]

- Morin E. (1969). The rumor of Orleans. Le Seuil.

- Morley D. (1980). The nationwide audience. British Film Institute.

- Newman N., Fletcher R., Schulz A., Andı S., Nielsen R. K. (2020). Reuters institute digital news report 2020. [web]

- Nyhan B. (2020). Facts and myths about misperceptions. Journal of Economic Perspectives, 34(3), 220–236.

- Orben A. (2020). The Sisyphean cycle of technology panics. Perspectives on Psychological Science, 15(5), 1143–1157. [web]

- Osmundsen M., Bor A., Vahlstrup P. B., Bechmann A., Petersen M. B. (2021). Partisan polarization is the primary psychological motivation behind political fake news sharing on Twitter. American Political Science Review, 115, 999–1015. [web]

- Paris Match Belgique. (2020). Misinformation about coronavirus responsible for thousands of deaths, study finds.

- Prior M., Sood G., Khanna K. (2015). You cannot be serious: The impact of accuracy incentives on partisan bias in reports of economic perceptions. Quarterly Journal of Political Science, 10(4), 489–518.

- Pulido C. M., Villarejo-Carballido B., Redondo-Sama G., Gómez A. (2020). COVID-19 infodemic: More retweets for science-based information on coronavirus than for false information. International Sociology, 35(4), 377–392.

- Ramaciotti Morales P., Berriche M., Cointet J.-P. (2022). The geometry of misinformation: Embedding Twitter networks of users who spread fake news in geometrical opinion spaces. [web]

- Rogers R. (2020). The scale of Facebook’s problem depends upon how “fake news” is classified. Harvard Kennedy School Misinformation Review, 1, 1–15.

- Rogers R. (2021). Marginalizing the mainstream: How social media privilege political information. Frontiers in Big Data. Advance online publication. [web]

- Schaffner B. F., Luks S. (2018). Misinformation or expressive responding? What an inauguration crowd can tell us about the source of political misinformation in surveys. Public Opinion Quarterly, 82(1), 135–147.

- Schwartz A. B. (2015). Broadcast hysteria: Orson Welles’s War of the Worlds and the art of fake news. Macmillan.

- Schwartz O. (2018, November 12). You thought fake news was bad? Deep fakes are where truth goes to die. The Guardian. [web]

- Silverman C. (2016, November 16). This analysis shows how viral fake election news stories outperformed real news on Facebook. Buzzfeed News. [web]

- Simon F. M., Camargo C. (2021). Autopsy of a metaphor: The origins, use, and blind spots of the “infodemic.” New Media & Society. Advance online publication. [web]

- Tandoc E. C., Jr., Lim Z. W., Ling R. (2018). Defining “fake news”: A typology of scholarly definitions. Digital Journalism, 6(2), 137–153.

- Tripodi F. (2018). Searching for alternative facts (p. 64). Data & Society.

- Tsfati Y., Boomgaarden H., Strömbäck J., Vliegenthart R., Damstra A., Lindgren E. (2020). Causes and consequences of mainstream media dissemination of fake news: Literature review and synthesis. Annals of the International Communication Association, 1–17.

- Tufekci Z. (2014). Big questions for social media big data: Representativeness, validity and other methodological pitfalls. Eighth International AAAI Conference on Weblogs and Social Media, 10. [web]

- Uscinski J., Enders A., Klofstad C., Seelig M., Drochon H., Premaratne K., Murthi M. (2022a). Have beliefs in conspiracy theories increased over time? PLoS One, 17(7), e0270429.

- Uscinski J., Enders A. M., Klofstad C., Stoler J. (2022b). Cause and effect: On the antecedents and consequences of conspiracy theory beliefs. Current Opinion in Psychology, 47, 101364.

- Van Duyn E., Collier J. (2019). Priming and fake news: The effects of elite discourse on evaluations of news media. Mass Communication and Society, 22(1), 29–48.

- Viner K. (2016). How technology disrupted the truth. The Guardian. [web]

- Vosoughi S., Roy D., Aral S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151.

- Wagner M. C., Boczkowski P. J. (2019). The reception of fake news: The interpretations and practices that shape the consumption of perceived misinformation. Digital Journalism, 7(7), 870–885.

- Wardle C. (2021). Broadcast news’ role in amplifying Trump’s tweets about election integrity. First Draft. [web]

- Watts D. J., Rothschild D. M. (2017). Don’t blame the election on fake news. Blame it on the media. Columbia Journalism Review, 5. [web]

- World Risk Poll. (2020). “Fake news” is the number one worry for internet users worldwide. [web]

- Ylä-Anttila T. (2018). Populist knowledge: “Post-truth” repertoires of contesting epistemic authorities. European Journal of Cultural and Political Sociology, 5(4), 356–388. [web]

[1] Roderic Day, “Masses, Elites, and Rebels: The Theory of ‘Brainwashing’” (2022). [web]

[2] Mitchell et al., 2019.

[3] Auxier, 2020.

[4] World Risk Poll, 2020.

[5] Benkler et al., 2018; Bennett & Livingston, 2020.

[6] Newman et al., 2020.

[7] Allen et al., 2020; Altay et al., 2022; Cordonier & Brest, 2021.

[8] Grinberg et al., 2019; Guess et al., 2019.

[9] Acerbi et al., 2022.

[10] Benkler et al., 2018; Bennett & Livingston, 2020; Tsfati et al., 2020.

[11] Guess et al., 2020; Mercier, 2020; Nyhan, 2020.

[12] Anderson, 2021; Carlson, 2020; Jungherr & Schroeder, 2021; Mitchelstein et al., 2020.

[13] Marwick, 2008.

[14] Orben, 2020.

[15] Cohen, 1972, p. 8.

[16] Cantril et al., 1940.

[17] Schwartz, 2015, p. 184.

[18] Anderson, 2021; Carlson, 2020; Jungherr & Schroeder, 2021; Mitchelstein et al., 2020.

[19] Allen et al., 2020.

[20] Boyd & Crawford, 2012; Kitchin, 2014; Tufekci, 2014.

[21] Livingstone, 2019.

[22] Viner, 2016.

[23] Schwartz, 2018.

[24] Nyhan, 2020.

[25] Allcott & Gentzkow, 2017.

[26] Acerbi, 2020.

[27] Bonhomme, 2016.

[28] Morin, 1969.

[29] Tufekci, 2014.

[30] Mellon & Prosser, 2017.

[31] McClain, 2021.

[32] Benkler et al., 2018; Tsfati et al., 2020.

[33] Wardle, 2021.

[34] E.g., Broockman & Kalla, 2022.

[35] BuzzFeed, 2016.

[36] Watts & Rothschild, 2017.

[37] Majid, 2021.

[38] Majid, 2021.

[39] Majid, 2021.

[40] Allen et al., 2020.

[41] Cordonier & Brest, 2021.

[42] Cordonier & Brest, 2021.

[43] Cordonier & Brest, 2021.

[44] Cordonier & Brest, 2021.

[45] Grinberg et al., 2019.

[46] Fletcher et al., 2018; Guess et al., 2020.

[47] Allen et al., 2020.

[48] Cordonier & Brest, 2021.

[49] Cordonier & Brest, 2021.

[50] Allen et al., 2021.

[51] Cointet et al., 2021.

[52] Vosoughi et al., 2018, p. 3.

[53] Fox, 2018.

[54] Berger, 2018.

[55] For instance, see Sinan Aral’s tweet. [web]

[56] Pulido et al., 2020.

[57] Bruns & Keller, 2020.

[58] Cinelli et al., 2020.

[59] For a review, see Tandoc et al., 2018.

[60] Rogers, 2020.

[61] Silverman, 2016.

[62] Rogers, 2020.

[63] Longhi, 2021.

[64] Rogers, 2021, p. 2.

[65] Athique, 2018, p. 59.

[66] Livingstone, 2019.

[67] Boyd & Crawford, 2012, p. 666.

[68] E.g., Altay et al., 2021.

[69] Altay et al., 2021.

[70] Acerbi, 2019; Berriche & Altay, 2020; Metzger et al., 2021; Osmundsen et al., 2021.

[71] 2019, p. 881.

[72] Lull, 1988; Morley, 1980.

[73] Livingstone, 2019.

[74] Fletcher & Nielsen, 2019.

[75] Wagner & Boczkowski, 2019.

[76] Tripodi, 2018.

[77] Ylä-Anttila, 2018.

[78] Marwick & Partin, 2022.

[79] Berriche, 2021.

[80] Acerbi et al., 2022.

[81] Altay, 2022.

[82] 2018.

[83] Graham, 2021.

[84] Luskin & Bullock, 2011.

[85] 2018.

[86] Clifford et al., 2019.

[87] Hill & Roberts, 2021; Krosnick, 2018.

[88] Clifford et al., 2019.

[89] Uscinski et al., 2022a.

[90] Nyhan, 2020.

[91] Bullock et al., 2013.

[92] Bullock et al., 2013; Prior et al., 2015.

[93] Schaffner & Luks, 2018, p. 136.

[94] Nyhan, 2020.

[95] Graham, 2020.

[96] Luskin et al., 2018.

[97] Graham, 2021.

[98] Graham, 2021.

[99] Allcott & Gentzkow, 2017.

[100] Islam et al., 2020.

[101] Paris Match Belgique, 2020.

[102] France info, 2020.

[103] Gharpure et al., 2020.

[104] Litman et al., 2020; reminiscent of the “lizardman’s constant” by Alexander, 2013.

[105] Litman et al., 2020, p. 1.

[106] Guess et al., 2019, 2021.

[107] Kollmuss & Agyeman, 2002.

[108] Landry et al., 2018.

[109] Mercier, 2020; Mercier & Altay, 2022.

[110] 2011, p. 185.

[111] Uscinski et al., 2022b.

[112] Mercier & Altay, 2022; Uscinski et al., 2022b.

[113] Kim and Kim, 2019, p. 307.

[114] Kalla & Broockman, 2018.

[115] Karpf, 2019.

[116] Mercier, 2020.

[117] Claidière et al., 2014.

[118] Simon & Camargo, 2021.

[119] Lasswell, 1927.

[120] Anderson, 2021.

[121] Broockman & Kalla, 2022.

[122] Livingstone, 2019.

[123] Simon & Camargo, 2021.

[124] Uscinski et al., 2022b.

[125] Allen et al., 2020; Cordonier & Brest, 2021.

[126] Li & Wagner, 2020.

[127] Cushion et al., 2020.

[128] Altay et al., 2020; Jungherr & Schroeder, 2021; Miró-Llinares & Aguerri, 2021; Van Duyn & Collier, 2019.

[129] Altay & Acerbi, 2022.

[130] Ramaciotti Morales et al., 2022.